Responsible AI: How evaluagent builds trust, not risk

The undoubted power of Artificial Intelligence (AI) comes with real worries and responsibilities, particularly concerns over how providers use their customers’ data.

At evaluagent, we recognise these concerns, which is why we develop to an ethical, customer-centric standard often called ‘responsible AI’. In this article, we’ll explore what responsible AI means, why it matters, and how we make it possible for organizations like yours to embrace the transformative benefits of AI whilst mitigating risk.

At its core, responsible AI gives the customer complete transparency over how the system works, what happens to their data, and why the model gives each answer. This contrasts with ‘black box’ AI systems, where decision-making and data use are hidden from the customer.

Many providers, particularly in domains like CX, take a black box approach because it’s less work and gives them freedom to use customer data to train their ‘proprietary’ models. We believe customers deserve better, so we have put in the extra effort to develop all our systems responsibly.

Taking this approach builds trust – not just with customers, but amongst your team too, helping to bring about better outcomes.

There are four key reasons why responsible AI is critical:

Most enterprises now have their own secure internal AI programs. These enterprises, and increasingly their counterparts in the mid-market, are becoming more aware and (rightly) protective of their customers’ data, and are unwilling to relinquish it to murky third parties engaged in training proprietary black box models. Without a responsible approach to AI, a vendor is unlikely to pass the increasingly high transparency and accountability thresholds required to deploy in these environments.

Organizations are under increasing reputational pressure to account for all uses of personal data to train AI models. Concerns about privacy breaches and data misuse are driving a shift towards responsible practices that prioritize data security and integrity.

Global regulations, such as the EU AI Act, are setting stricter guidelines for ethical AI usage. These regulations demand transparency and accountability, particularly in areas like data privacy and performance evaluation. Companies adopting a responsible approach to AI are better positioned to comply with these evolving standards.

As we shift to the agentic AI paradigm, where specific tasks or workflows are handled by AI agents working on top of a central AI architecture, we expect most businesses to build their own LLM, data store and infrastructure. Deploying onto these in-house architectures is virtually impossible for black box services, which depend on their proprietary models to function. In contrast, responsible AI vendors will find it easy to replace their standard backend with a company’s own, making them future-proof for the agentic age.

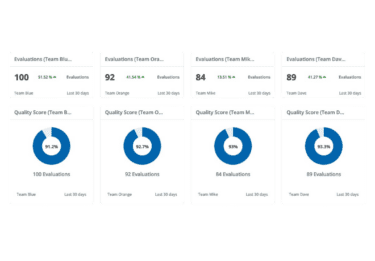

At evaluagent, we’ve built a suite of tools and practices that embody responsible AI. We offer lots of different ways for you to feel confident in how your contact center uses AI in practice.

evaluagent’s responsible AI practices offer reassurance, transparency, and flexibility without compromising the business outcomes you expect to achieve. Whether clients choose to run on our state-of-the-art infrastructure or their own secure systems, they can trust that their data remains protected and their AI processes are accountable.

By prioritizing responsible AI, we’re not just meeting today’s standards but laying a foundation for the future. This commitment does not hinder your ability to innovate: our platform is ready to integrate into even the most complex environments, so organizations can innovate without compromising trust, security, or compliance.

At evaluagent, we’re committed to helping businesses like yours harness the power of AI responsibly while achieving your desired business goals. If you have any questions or would like to learn more about our approach, reach out to us for a chat.

Get in touch with our expert team to discover how evaluagentCX could transform your approach to customer service.
