The ultimate guide to QA scoring

Many people regard scoring as being the most difficult part of the Quality Assurance (QA) process. In this blog we look at scoring in more detail and discuss:

If you’re just beginning with QA, check out our dedicated blog on scoring tips for new evaluators.

There are many reasons for QA scoring but the most common three are to provide quality assurance, give feedback to Agents and improve performance.

Scoring an individual interaction is interesting but it can also be misleading. It’s only when multiple scores are aggregated and analyzed that a real picture is provided.

This is really powerful when presenting trends over a period of time, as these can be used to highlight development needs and, in time, demonstrate improvement.
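As a rough illustration of aggregating scores into a trend, the sketch below averages overall scores by month. The evaluation records, the dates and the `monthly_averages` helper are all made up for this example, and it assumes each interaction already has an overall score expressed as a percentage.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical evaluation records: (date of interaction, overall score as a percentage).
evaluations = [
    (date(2024, 1, 8), 62.0),
    (date(2024, 1, 22), 70.0),
    (date(2024, 2, 5), 74.0),
    (date(2024, 2, 19), 78.0),
    (date(2024, 3, 4), 85.0),
]


def monthly_averages(records):
    """Group scores by (year, month) and return the mean for each month, in date order."""
    by_month = defaultdict(list)
    for day, score in records:
        by_month[(day.year, day.month)].append(score)
    return {month: mean(scores) for month, scores in sorted(by_month.items())}


trend = monthly_averages(evaluations)
for (year, month), average in trend.items():
    print(f"{year}-{month:02d}: {average:.1f}")
```

A single score of 62 in January says little on its own; the month-by-month averages are what show whether quality is moving in the right direction.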

By breaking down an interaction into components and scoring each part, you get a detailed analysis of that interaction, which can be broken down to identify strong and weak areas. The more detail, the more accurate the score will be.

One word of warning about QA scoring: Some people try to benchmark against others. This will only work if there are identical questions used in scoring and the service being delivered is comparable. If they’re not, then it’s not a valid benchmark. It’s much better to focus on trends internally and look for continuous improvement.

Top tip: Focus on improving the overall trend of QA scores for continuous improvement.

How do you ensure that the score reflects the quality of the interaction? This is very dependent on the quality scorecard or scoring mechanism developed.

The starting point is to ensure that the question, or scoring criteria, reflects what’s important.

The next stage is to look at the relative importance of each score when compared with the others. For example, there may be some compliance line items that are compulsory, so we could naturally assume these are more important than the agent introducing themselves by name.

The way we handle this is by giving each question a weighting. Using the previous example, the compliance matter may be 10, but the use of the name may be 5.

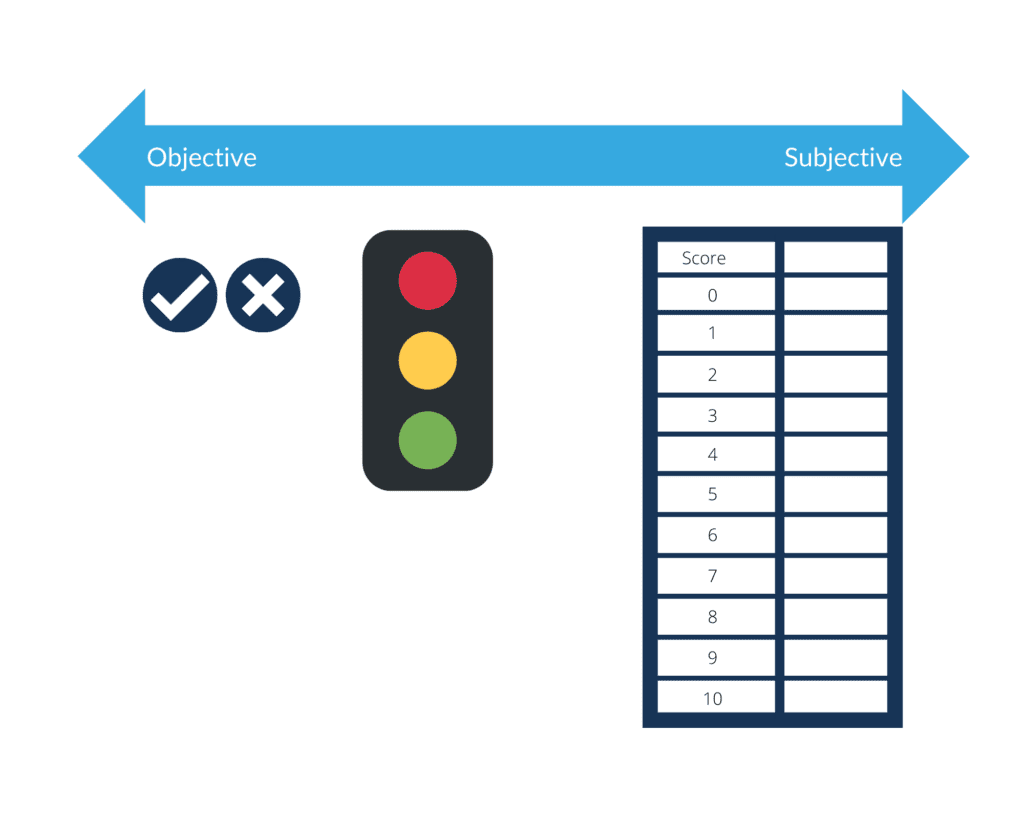
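To make the effect of weighting concrete, here is a minimal sketch using the weights from the example (10 for the compliance item, 5 for the use of the name). The `Question` class, the `weighted_percentage` function and the 0 to 10 scale per question are assumptions made for this illustration, not a prescribed formula.

```python
from dataclasses import dataclass


@dataclass
class Question:
    text: str
    weight: float  # relative importance, e.g. 10 for compliance, 5 for using the name
    score: float   # evaluator's score on an assumed 0-10 scale


def weighted_percentage(questions: list[Question], max_score: float = 10.0) -> float:
    """Return the weighted score as a percentage of the maximum achievable."""
    achieved = sum(q.weight * q.score for q in questions)
    possible = sum(q.weight * max_score for q in questions)
    if possible == 0:
        raise ValueError("scorecard has no weighted questions")
    return 100.0 * achieved / possible


scorecard = [
    Question("Compliance statement made correctly and in full", weight=10, score=10),
    Question("Agent introduced themselves by name", weight=5, score=0),
]
print(round(weighted_percentage(scorecard), 1))  # 66.7
```

Without weighting, meeting one of the two questions would give 50%. With the compliance item weighted twice as heavily, the same interaction scores about 67%, which better reflects that the more important item was met.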

We’re going to discuss three types of scoring methods: binary, traffic light, and numerical score.

This is as simple as it gets: yes or no.

For example: Was the compliance statement made correctly and in full? Yes or no.

This is good when looking objectively at whether the compliance statement was delivered but it does not support assessment of how the statement was delivered.

Traffic light scoring (sometimes known as ‘RAG’ for Red, Amber, Green) adds another option between ‘yes’ and ‘no’: a partial ‘yes’, or a ‘yes, but not all’.

It can often be based on whether a call has passed or failed its quality stage. You need to take care with traffic light scoring, as the amber stage is very variable. Make sure you know internally what this means for your business – calibration sessions can help.

This is typically a range (e.g. 0 – 10), where the evaluator determines the score for that interaction as a position on the scale.

This can be the most difficult for evaluators as it’s often regarded as a subjective score. The way to make it more objective is to provide details of what is expected from each score on the scale with examples being used to show how scores should be allocated – you can see an example in our Complaints scorecard.

This is the most useful way to determine accurate changes in performance as it provides the level of detail required to highlight the difference in quality between different interactions.

The different types of scoring all have strengths and weaknesses, and the right choice depends upon how objective or subjective the thing being measured is. The following scale highlights where each of the above fits.

The following table summarises the types of scoring:

| | Binary | Traffic Light | Numerical |
| --- | --- | --- | --- |
| Score | Yes/No | Red/Amber/Green | 0 – 10 |
| Measure | Objective | Objective | Subjective |
| Uses | Compliance | Compliance with variable | Variable scoring where difference can be identified |
| Strengths | Easy to score | Provides an option for ‘some’ or ‘partial’ | Enables a detailed score that differentiates performance |
| Weakness | Can only be yes or no | Amber can be very variable | Difficult to clearly identify score |
| Notes | Limited opportunity to track performance in detail | Does not provide clarity of performance | Needs a definition for each level |

One of the most successful methods of scoring is to use a mixture of scoring on a single scorecard. If you have a range of scores between 0 and 10 then you do not have to offer every score for every question. Using the compliance question as an example, the question may be…

Was the compliance statement read out in full?

On a numerical scale, the choice of answers would be 0 or 10, as there is no point in between. However, for most areas there will be a greater variance.

Was the compliance statement delivered in a way that the customer understood it?

This demonstrates a need for more variable scoring, and could be subjective depending on the evaluator.

Of course, the weighting discussed earlier will have an impact upon any final score.
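One way to picture a mixed scorecard is as a shared 0 to 10 scale on which each question offers only some of the answers. The sketch below is illustrative only: the question keys, the `ALLOWED_ANSWERS` mapping and the `validate` helper are hypothetical names, and whole-number scores are an assumption.

```python
# Each question lists the answers an evaluator may choose on the shared 0-10 scale.
ALLOWED_ANSWERS = {
    # "Was the compliance statement read out in full?" -> no point in between
    "statement_read_in_full": {0, 10},
    # "Was it delivered in a way that the customer understood it?" -> any whole score
    "statement_understood": set(range(0, 11)),
}


def validate(question: str, score: int) -> int:
    """Return the score unchanged if it is an allowed answer for the question."""
    allowed = ALLOWED_ANSWERS[question]
    if score not in allowed:
        raise ValueError(f"{score} is not an allowed answer for {question!r}")
    return score


print(validate("statement_read_in_full", 10))  # 10
print(validate("statement_understood", 7))     # 7
```

Restricting the answers per question keeps the binary items objective while still letting both kinds of question feed into one overall score.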

Finally, many organisations make the mistake of requiring a minimum score for the interaction to pass. This is okay until a particular area for scoring becomes inappropriate.

For example, it isn’t likely to be appropriate to ask a very angry customer if there is anything else that you can help them with. In this case, the question should be removed from the scoring without penalising the agent.
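A simple way to remove a question without penalising the agent is to leave it out of both the achieved and the possible totals. The sketch below assumes weighted questions scored from 0 to 10 and uses `None` to mark a question as not applicable; the weights, scores and the `score_with_exclusions` function are invented for the example.

```python
from typing import Optional


def score_with_exclusions(
    items: list[tuple[float, Optional[float]]], max_score: float = 10.0
) -> float:
    """items are (weight, score) pairs; a score of None marks a question as not applicable."""
    applicable = [(w, s) for w, s in items if s is not None]
    if not applicable:
        raise ValueError("no applicable questions to score")
    achieved = sum(w * s for w, s in applicable)
    possible = sum(w * max_score for w, _ in applicable)
    return 100.0 * achieved / possible


call = [
    (10, 10),   # compliance statement read in full
    (5, 8),     # tone and empathy
    (5, None),  # "anything else I can help with?" was not appropriate on this call
]
print(round(score_with_exclusions(call), 1))  # 93.3
```

Had the skipped question been scored as 0 instead, the same call would come out at 70%, which is exactly the kind of unfair penalty that could push an otherwise good interaction below a pass mark.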

There are many example scorecards out there that you can use to get started, but we’d always recommend that these are adapted for your contact center, reflecting the specifics of that organisation and the services it provides.

Check out these 3 scorecard templates made specifically for customer support teams.

There are a huge number of variations of the above scoring types and how they can be used. Outputs are reported as a number, a percentage or, for some, just a number within a range.

It’s important to retain the same method in order to enable trend analysis and track performance. Continually changing the scores and the way in which they are calculated will only lead to confusion, and undermine any meaningful comparison.

Looking for new ways of scoring is common as evaluators look to change the way in which they complete their roles. An example is where some scoring mechanisms start with a perfect score and then reduce it for areas that are missed or not applied correctly. This can be quite negative; a better alternative is a positive approach that gives credit for including the correct ingredients/competencies in a call.

Most evaluators find scoring difficult when they start.

Having clear scoring criteria helps, but for many it’s all about getting experience and gaining confidence.

Some useful tips are:

Because scoring is inevitably partially subjective, it’s really important to ensure that it is fair and consistent. This can be checked by working with other people to check samples, holding calibration sessions with Evaluators and Agents to ensure that the levels are all the same, and observing others when they are scoring.
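As one possible way to prepare for a calibration session, the sketch below compares the scores several evaluators gave to the same interactions and flags the ones where they disagree most. The data, the evaluator and call names, and the tolerance of 2 points are all assumptions for illustration; the right tolerance is something to agree internally.

```python
# Hypothetical calibration data: three evaluators score the same three interactions (0-10).
scores = {
    "call_A": {"eval_1": 8, "eval_2": 7, "eval_3": 8},
    "call_B": {"eval_1": 9, "eval_2": 4, "eval_3": 6},
    "call_C": {"eval_1": 5, "eval_2": 5, "eval_3": 6},
}

TOLERANCE = 2  # assumed maximum acceptable gap between the highest and lowest score


def needs_discussion(session, tolerance):
    """Return (call, spread) pairs where evaluators differ by more than the tolerance."""
    flagged = []
    for call, by_evaluator in session.items():
        spread = max(by_evaluator.values()) - min(by_evaluator.values())
        if spread > tolerance:
            flagged.append((call, spread))
    return flagged


print(needs_discussion(scores, TOLERANCE))  # [('call_B', 5)]
```

Interactions with a wide spread are good candidates to listen to together, so the group can agree what each level on the scale should mean.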

Consistency is essential when providing feedback to Agents.

There are numerous reasons for scoring the quality of customer interactions, but the most common are to provide quality assurance, give feedback to Agents and improve performance.

There are also different types of scoring, but these need to be based on a set of evaluation criteria that are aligned to the organisation and the service it delivers. Initially, scoring is not easy but it does get much easier with experience and confidence. Having a good understanding of the ingredients that are being measured really helps!

A Quality Score is a great metric, yes, but QA can go way beyond just presenting a score to your business. Find out how scoring fits into the rest of the QA process.

For more resources on Quality Assurance, employee engagement, coaching and feedback and other CX topics, head over to the Knowledge Hub.